Simulating Justice: Part 2

Using LLM-driven Simulations to Derive Principles for Governing Emerging Technologies.

Recap

In my last post, First Principles, the State of Nature, and Effective Accountability, I explored tech governance's core challenge: balancing innovation with stability. I proposed devolving power to where meaningful accountability is possible.

But why should anyone care about this arbitrary proposal?

Social Contract thinkers like John Rawls believed that the best way to determine principles of justice is to imagine what principles the members of a society would agree to be governed by.

Traditionally, Social Contract philosophers have run these thought experiments inside of their heads.

While compelling, their conclusions remain arbitrary.

I don’t think there’s that much of a difference between “Well I thought about it a lot and I think this is what society should be like” and “Well I thought about what other people would think if they thought about what society should look like and this is what I think they’d think it should be like.”

Is there a way to automate this reasoning such that the conclusions carry more weight? Perhaps a simulation?

I considered writing an agent-based simulation in Python, but the approach felt limited and fad-ish.

And then I was like oh, why not just use LLMs?

At first I was like okay, maybe you’re just being lazy.

Surprisingly, LLMs' non-deterministic nature and human biases - usually weaknesses for scientific inquiry - are strengths for this use case.

But why trust a single model when different AI companies might encode different principles of justice?

So I ran two experiments:

I built a custom application to model what principle rational agents would select for governing emerging tech.

I asked Claude.

Simulating the State of Nature with Aldea

First, I decided to build an app.

That’s not quite accurate; actually, I'd been kicking around an app to help consultants simulate complex brainstorming, and this seemed like a perfect test case.

So here’s how Aldea (Spanish for 'small, serene town') works:

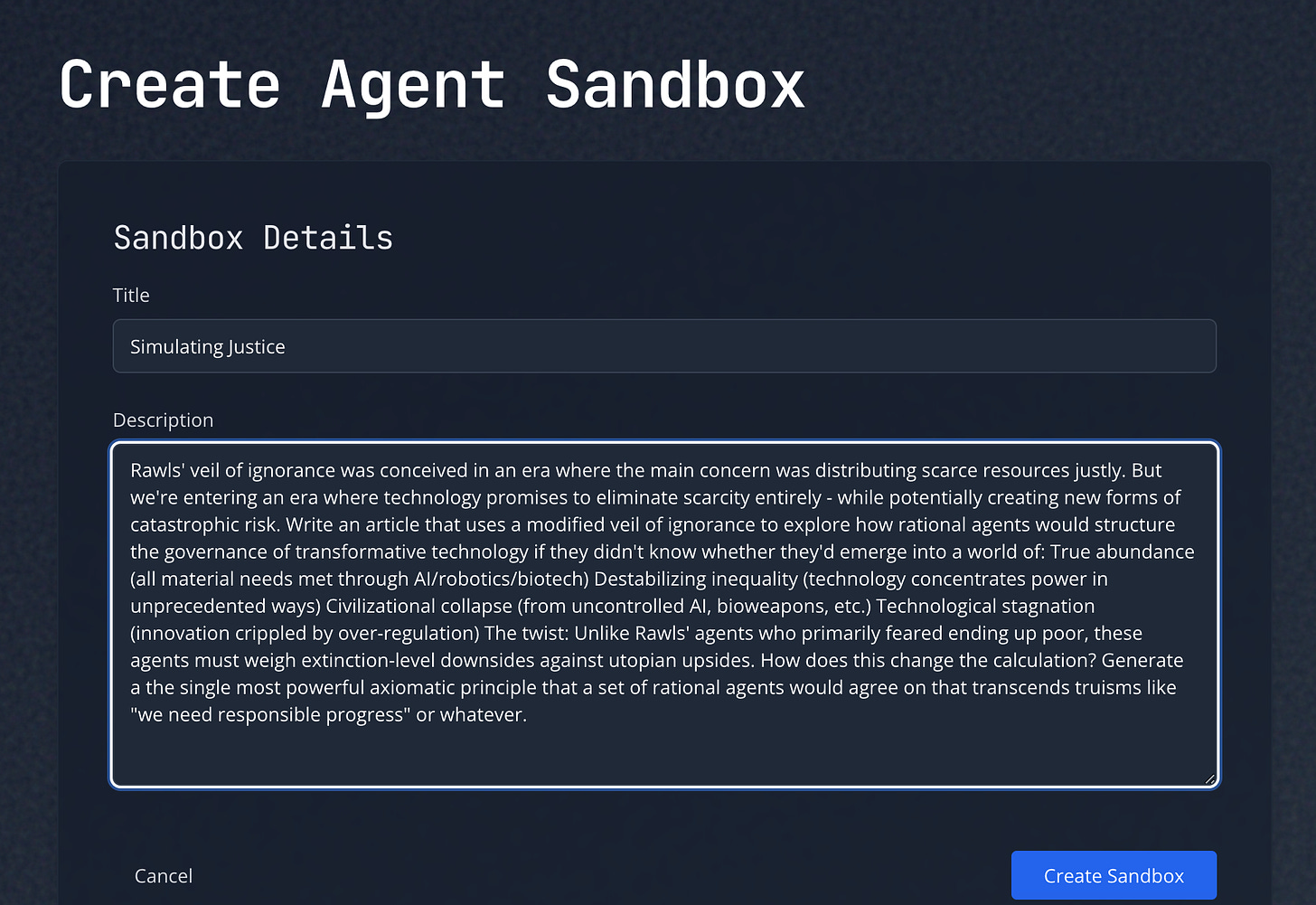

Define the scenario

First, I defined the scenario: what principles would a set of rational agents select for governing emerging tech, where, “unlike Rawls’ agents who primarily feared ending up poor, agents must weigh extinction-level downsides against utopian upsides?”

Configure agents

In Rawls’ “veil of ignorance” thought experiment, agents don’t know what their position in society will be after agreement is reached, which is supposed to encourage them to negotiate in good faith.

However, for our purposes, we need something to distinguish the agents from each other — otherwise why bother having multiple agents deliberate?

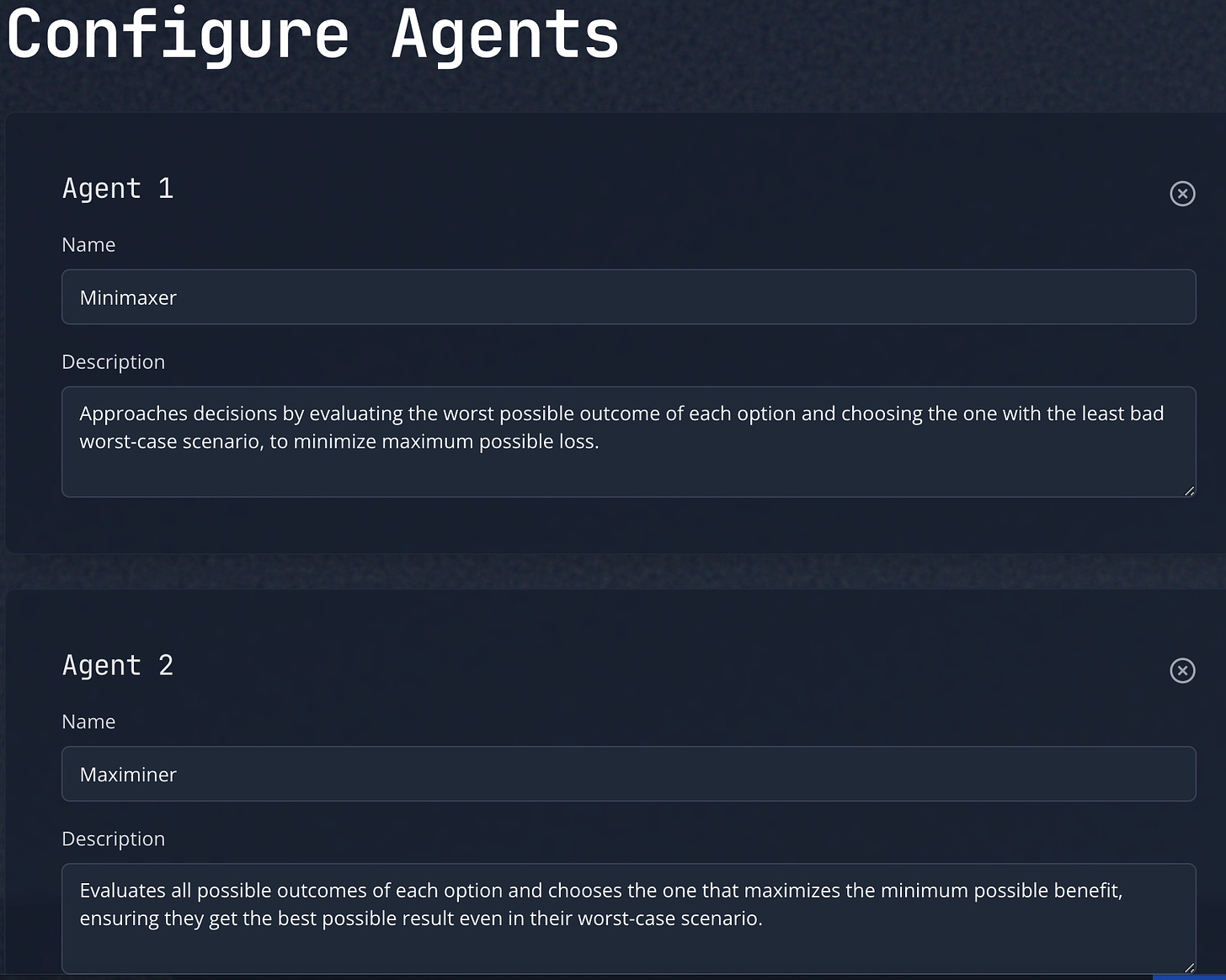

I felt like the most relevant non-socioeconomic characteristic to consider was each agent's psychological approach to risk management, so I defined agents with classic risk management strategies.

For instance, some people are minimaxers, who select the option that minimizes their maximum possible loss. Others are maximiners, who select the option that maximizes their minimum possible benefit.
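To make these decision rules concrete, here is a minimal sketch in Python. It treats minimax as minimizing the largest possible loss and maximin as maximizing the smallest possible payoff. The option names and payoff numbers are invented for illustration and are not taken from Aldea. One caveat: when loss is just the negated payoff, the two rules pick the same option, so the sketch also includes minimax regret, a related rule that this post does not mention, to show how the choice can diverge.

```python
# Hypothetical payoff table: each governance option maps to its payoff in
# three possible futures (bad, middling, good). All numbers are invented.
PAYOFFS = {
    "strict moratorium": [2, 3, 4],
    "adaptive regulation": [1, 5, 8],
    "no regulation": [-10, 6, 12],
}


def maximin(payoffs):
    """Choose the option whose worst-case payoff is largest."""
    return max(payoffs, key=lambda option: min(payoffs[option]))


def minimax_loss(payoffs):
    """Choose the option whose largest loss (negated payoff) is smallest."""
    return min(payoffs, key=lambda option: max(-p for p in payoffs[option]))


def minimax_regret(payoffs):
    """Choose the option whose largest regret is smallest.

    Regret in a given future is the gap between the best payoff any option
    achieves in that future and the payoff this option achieves.
    """
    best_per_future = [max(column) for column in zip(*payoffs.values())]

    def worst_regret(option):
        return max(best - p for best, p in zip(best_per_future, payoffs[option]))

    return min(payoffs, key=worst_regret)


for rule in (maximin, minimax_loss, minimax_regret):
    print(f"{rule.__name__}: {rule(PAYOFFS)}")
```

On this toy table, maximin and minimax loss both choose the strict moratorium, while minimax regret chooses adaptive regulation, which is one way agents with different risk psychologies can end up defending different principles.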

Run it

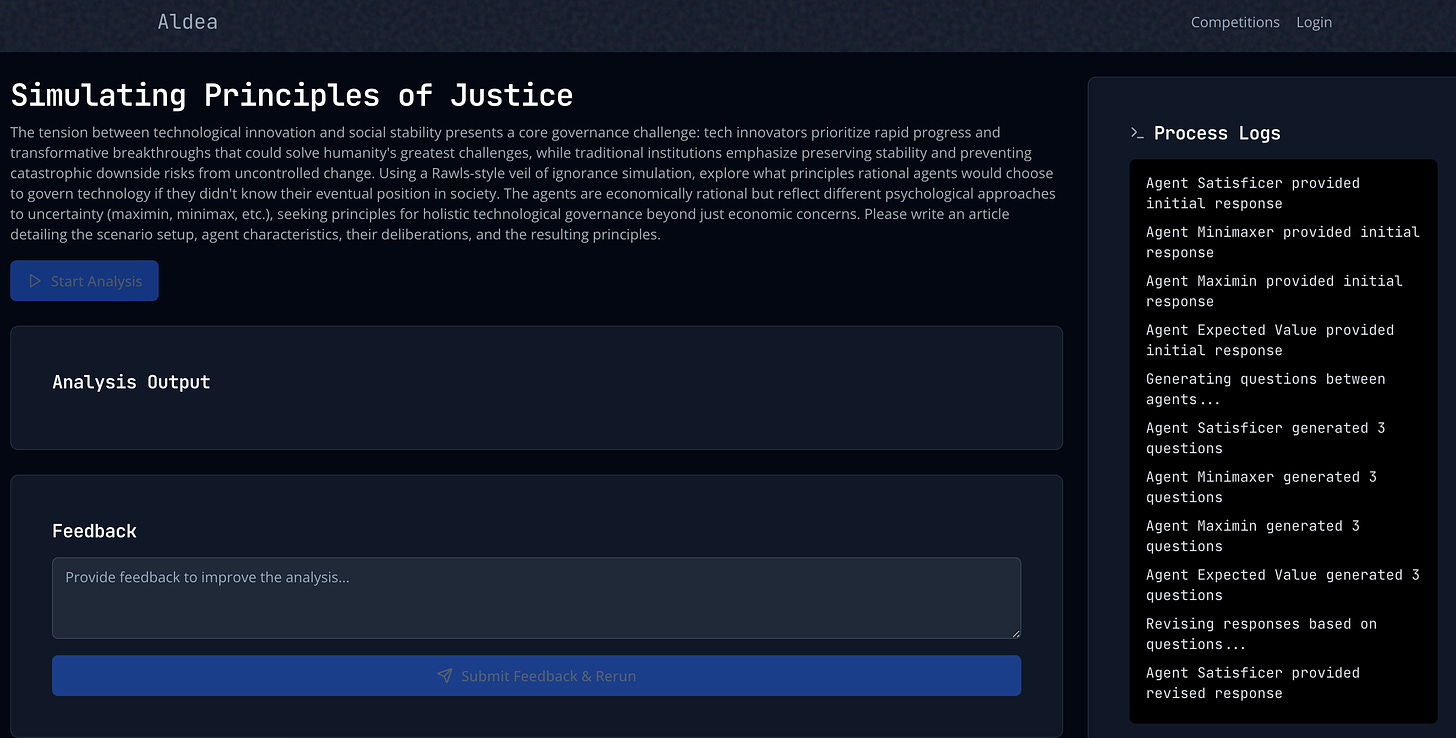

The application took the agents through a deliberative process:

Initial proposals

Each agent (gpt-4o) generated a response to the prompt, guided by its psychological profile.

Question exchanging

Each agent reviewed the other agents’ responses and asked questions to challenge assumptions and call out lazy reasoning.

Proposal revision

Each agent revised their initial response to address the questions they received.

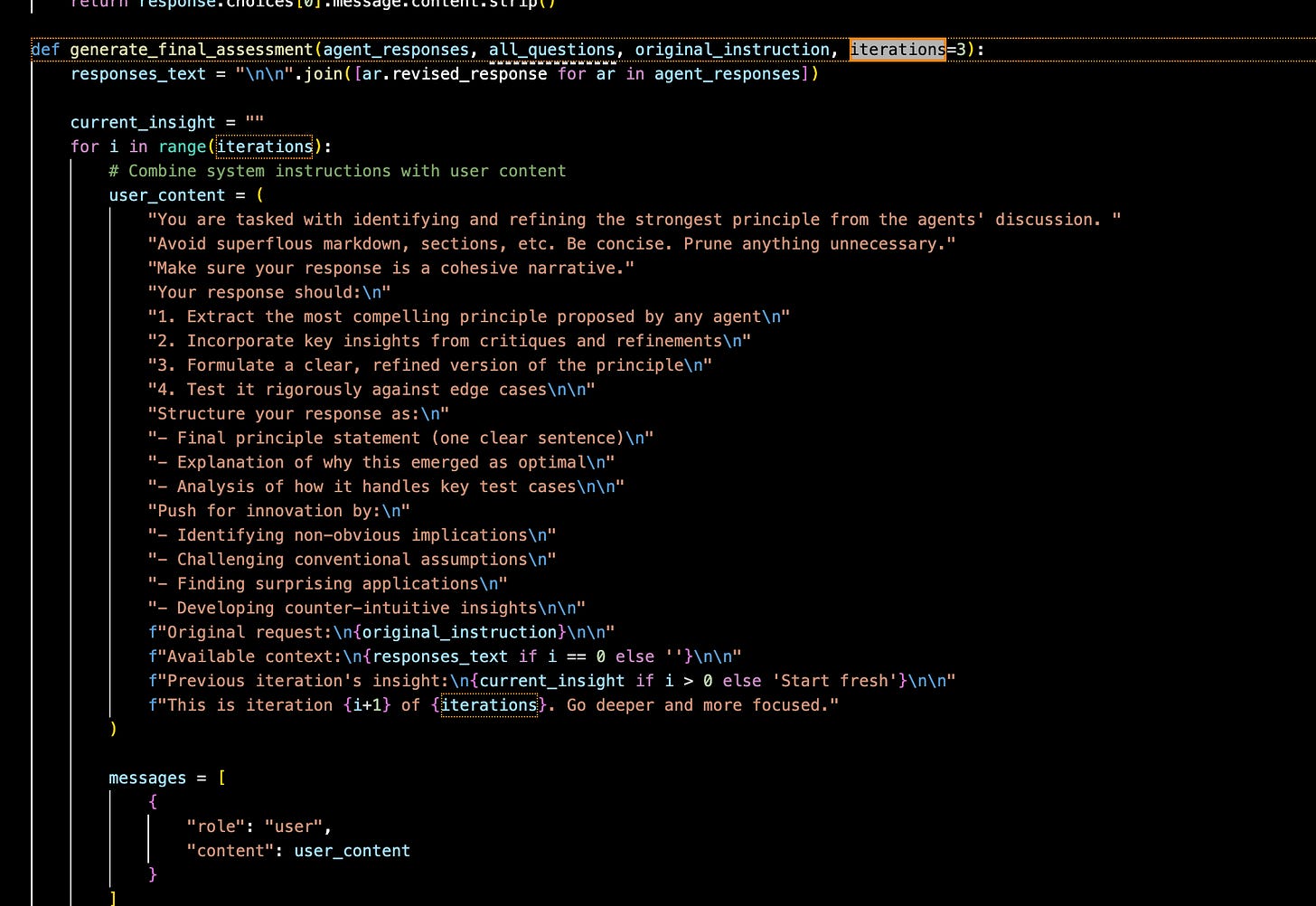

Final assessment

A separate agent (o1-preview) reviewed everything and generated a final assessment reflecting consensus between the agents. Notably, this agent has a tuneable parameter named iterations to simulate depth of thought:

Higher iteration counts prompted the agent to think progressively deeper, with each pass building on the previous analysis.
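The four stages above could be sketched roughly as follows. This is not Aldea's actual code: the `Agent` class, the `deliberate` function, the prompt wording, and the `llm` callable are all hypothetical stand-ins, and the model call is left as a pluggable function so the sketch runs offline without any particular SDK.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for a model call: (model_name, prompt) -> reply text.
LLM = Callable[[str, str], str]


@dataclass
class Agent:
    name: str
    profile: str  # e.g. "maximin: maximize the worst-case outcome"


def deliberate(scenario: str, agents: list[Agent], llm: LLM, iterations: int = 1,
               agent_model: str = "gpt-4o", judge_model: str = "o1-preview") -> str:
    # 1. Initial proposals: each agent answers the scenario from its profile.
    proposals = {
        a.name: llm(agent_model, f"Profile: {a.profile}\nScenario: {scenario}\n"
                                 "Propose a governing principle.")
        for a in agents
    }

    # 2. Question exchange: each agent challenges every other agent's proposal.
    questions: dict[str, list[str]] = {a.name: [] for a in agents}
    for asker in agents:
        for target in agents:
            if asker.name != target.name:
                questions[target.name].append(llm(
                    agent_model,
                    f"Profile: {asker.profile}\nChallenge the assumptions in:\n"
                    f"{proposals[target.name]}"))

    # 3. Proposal revision: each agent answers the questions it received.
    revised = {
        a.name: llm(agent_model, f"Profile: {a.profile}\nYour proposal:\n"
                                 f"{proposals[a.name]}\nQuestions received:\n"
                                 + "\n".join(questions[a.name])
                                 + "\nRevise your proposal.")
        for a in agents
    }

    # 4. Final assessment: a separate model reviews the full record. Each
    #    pass is shown the previous pass's analysis and asked to go deeper.
    record = "\n\n".join(
        f"{a.name}\nInitial: {proposals[a.name]}\n"
        f"Questions: {' | '.join(questions[a.name])}\nRevised: {revised[a.name]}"
        for a in agents)
    assessment = ""
    for i in range(iterations):
        assessment = llm(judge_model,
                         f"Scenario: {scenario}\nDeliberation record:\n{record}\n"
                         f"Previous analysis:\n{assessment or '(none)'}\n"
                         f"Pass {i + 1}: deepen the analysis and state the consensus.")
    return assessment


def stub_llm(model: str, prompt: str) -> str:
    """Offline placeholder so the sketch runs without an API key."""
    return f"[{model}] reply to a {len(prompt)}-character prompt"
```

Calling `deliberate("How should emerging tech be governed?", [Agent("A", "maximin"), Agent("B", "minimax")], stub_llm, iterations=3)` walks through all four stages; replacing `stub_llm` with a real client call is the only change needed to run it against actual models. The loop in stage 4 is one plausible reading of the iterations parameter, not a description of how Aldea implements it.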

Iteration 1: The Resilience Principle

The first set of deliberations landed on “The Resilience Principle.”

The Resilience Principle offers a balanced approach to governing technology amid uncertainty. By fostering adaptability, ensuring equity, and enabling robust responses, it prepares society to navigate the complexities of technological change without knowing individual future positions.

In a world where the only constant is change, resilience becomes not just a desirable trait but a necessary foundation for a just and thriving society. The Resilience Principle encapsulates this necessity, providing a guiding axiom for technological governance that safeguards society while unlocking technology's full potential.

Adoption of this principle requires:

Commitment to Education: Preparing individuals to thrive in changing technological landscapes

Institutional Reform: Developing governance structures that are both protective and enabling

Cultural Shift: Embracing a mindset open to change, learning, and inclusive participation

Plausible, but tepid.

Iteration 2: Flexible Adaptability

Conventional wisdom suggests a cautious approach: regulate heavily to prevent catastrophic outcomes. Yet, this impulse may be as perilous as reckless advancement. The counterintuitive insight emerges that in the face of such profound uncertainty, rigid strategies—be they conservative or progressive—are inherently flawed. Instead, rational agents might advocate for a governance model rooted in flexible adaptability, paradoxically embracing uncertainty as the most stable foundation.

Pursuant to this principle, “uncertainty is not merely an obstacle to overcome but an element to integrate. By embedding uncertainty into governance structures, society becomes inherently equipped to pivot in response to the unknown.”

This approach reminded me of fasting and autophagy, or the idea that people can reduce the risk of cancer and other chronic diseases by fasting to prompt autophagy, which hastens the body’s process for killing aging cells and replacing them with new ones.

Iteration 2 proposed advancing Flexible Adaptability by “Institutionalizing Ephemeralism” and “Mandatory Uncertainty Injection.”

Principle 1: Institutionalizing Ephemeralism

In a world where change is the only constant, permanent solutions become liabilities. The agents might propose ephemeral institutions—governing bodies and regulations intentionally designed to be transient.

Sunset Clauses on Legislation: Laws automatically expire unless actively renewed, ensuring continual reassessment in light of new developments.

Agile Regulatory Frameworks: Regulations that can be rapidly modified or repealed in response to technological shifts.

By accepting impermanence, society remains nimble, reducing the risk of outdated policies obstructing progress or failing to mitigate new threats.

Principle 2: Mandatory Uncertainty Injection

To prevent overconfidence in any single trajectory, agents could mandate systemic uncertainty within decision-making processes.

Rotating Leadership Roles: Regularly changing those in positions of power to prevent entrenched interests and introduce fresh perspectives.

Deliberate Dissent Mechanisms: Institutions require the inclusion of contrarian views, with devil's advocates embedded in critical discussions.

This enforced plurality guards against blind spots and echo chambers, fostering a culture where questioning is institutionalized.

Iteration 3: Halt Innovation to Save Society

So Iteration 1 said we should promote resilience to weather uncertainty. Iteration 2 argued for embedding chaos in our institutions to promote resilience.

Iteration 3 abandons “resilience” in the face of innovation in favor of ending innovation to ensure security.

Rational agents, surveying this treacherous landscape from behind their veil of ignorance, would recognize that the asymmetry of outcomes necessitates a zero-risk tolerance for existential threats… Given this paradox, rational agents would logically opt for a global moratorium on certain forms of technological development. This moratorium would not be a temporary pause but a sustained effort to limit innovation in areas where the risks cannot be fully mitigated.

This is not a call for cautious advancement or better regulatory oversight. It is a provocative assertion that the rational choice is to prioritize survival over the pursuit of progress, even if it means sacrificing the very freedoms and curiosities that define us as a species. Such a stance challenges the bedrock of contemporary thought—the unwavering belief in progress as an inherent good—and proposes a counterintuitive strategy: intentional technological stagnation as the most rational means of ensuring human survival.

whoa.

The most significant policy proposal? Prohibit research into AGI.

Iteration 3 goes on to provide a litany of risks concomitant with prohibiting innovation.

The Opportunity Cost of Stagnation: Weighing Potential Losses

While prioritizing survival is rational, it carries significant opportunity costs.

Moral Responsibility: By halting innovation, humanity might fail to alleviate suffering that could have been mitigated through technological advancements, raising ethical concerns.

Intergenerational Tensions: Future generations may question or resent the limitations imposed, leading to societal fractures or eventual rebellion against the constraints.

Black Swan Vulnerabilities: Unexpected threats not currently understood could emerge, and without ongoing innovation, humanity might lack the tools to respond effectively.

The Psychological Burden: Living Under Constant Existential Threat

The awareness of looming existential risks, coupled with the suppression of progress, could impose a significant psychological burden on societies.

Anxiety and Fatalism: Populations might experience heightened anxiety, depression, or fatalistic attitudes, impacting mental health and social cohesion.

Cultural Stagnation: Art, literature, and cultural expressions may become monotonous or nihilistic, reflecting the suppression of new ideas and possibilities.

Resurgence of Dogmatic Beliefs: In the absence of progress, there may be a turn towards extremism or dogmatic ideologies as outlets for frustration or as alternative sources of meaning.

The underlying psychological theme is that we, as humans, view ourselves as unique because we are “tool building creatures.”

We increasingly measure our worth through technological accomplishments.

Without technological progress who are we and where does our value come from? The radical proposition to intentionally constrain or halt transformative innovation challenges the core of human ambition.

Yet, when viewed through the lens of rational agents grappling with existential uncertainty, it emerges as the logical path toward ensuring the survival of humanity.

This strategy demands immense discipline and a willingness to sacrifice deeply ingrained values—freedom, progress, and sovereignty—for the non-negotiable priority of existence. It is a call to reimagine what it means to thrive as a species, shifting from an outward quest for dominance over nature to an inward focus on preservation and cautious stewardship.

Iteration 3 requires that humans completely redefine their conception of self and self-worth. We’d have to go from “we are valuable because we build things” to “we are valuable because we exist.”

I wonder how many people will follow my emotional journey to this iteration, starting with “lol banning innovation? sounds dumb” to “hm, I also have been spending the last couple years questioning whether I might be happier if I learned how to derive a sense of worth outside of my professional accomplishments, maybe I should keep an open mind.”

Iteration 3 sketches a pathway towards effecting that reconception of worth:

Redefinition of Progress: Progress would no longer be measured by technological advancement but by the longevity and stability of human society. Success becomes synonymous with survival rather than innovation.

Ethical Realignment: Ethical frameworks shift from prioritizing individual rights and freedoms to collective responsibility and caution. The moral imperative becomes the prevention of existential risk above all else.

Societal Transformation: Education, culture, and politics pivot to support a survival-centric paradigm, fostering a global ethos of restraint and vigilance.

Iteration 3 also doesn’t eschew innovation completely or permanently.

Given the severe consequences of both action and inaction, rational agents might explore creating a controlled environment for safe innovation, though this presents its own challenges.

Innovation Sanctuaries: Designated areas or institutions where controlled innovation is allowed under strict oversight, possibly rekindling inequities or elite privileges.

Adaptive Moratoriums: Periodic reassessment of risks allowing for cautious advancement in response to new information, requiring robust predictive and governance mechanisms.

Evolution of Values: Society may develop new values prioritizing adaptability and resilience, fostering a culture capable of navigating between stagnation and reckless progress.

Lots of ideas. Much to process.

That was a lot

During breaks debugging Aldea (my app) I tried a similar approach with Claude.

Iteration 1: Give Claude the same prompt I gave Aldea.

Iteration 2: Give Claude the same prompt I gave Aldea and prompt for additional specificity.

In Part III of this series I’ll share which principles Claude generated for governing emerging technologies.

In Part IV I’ll explore how crypto might be embedded into the design and deployment of other emerging technologies to maximize equity and reduce existential risk.

I’ll also share an invite to Aldea, so you can do your own agent-driven brainstorms.